Data Engineering Master Class using AWS Analytics Services

Genre: eLearning | MP4 | Video: h264, 1280x720 | Audio: AAC, 44.1 KHz

Language: English | Size: 10.0 GB | Duration: 26h 15m

Build Data Engineering Pipelines using AWS Analytics Services such as Glue, EMR, Athena, Kinesis, Lambda, etc.

What you'll learn

Data Engineering leveraging AWS Analytics features

AWS Essentials such as S3, IAM, EC2, etc.

Understanding AWS S3 for cloud-based storage

Understanding virtual machines on AWS, known as EC2 instances

Managing AWS IAM users, groups, roles and policies for RBAC (Role Based Access Control)

Managing Tables using AWS Glue Catalog

Engineering Batch Data Pipelines using AWS Glue Jobs

Orchestrating Batch Data Pipelines using AWS Glue Workflows

Running Queries using AWS Athena - Serverless query engine service

Using AWS Elastic MapReduce (EMR) Clusters for building Data Pipelines

Using AWS Elastic MapReduce (EMR) Clusters for reports and dashboards

Data Ingestion using AWS Lambda Functions

Scheduling using AWS EventBridge

Engineering Streaming Pipelines using AWS Kinesis

Streaming Web Server logs using AWS Kinesis Firehose

Overview of data processing using AWS Athena

Running AWS Athena queries or commands using CLI

Running AWS Athena queries using Python boto3

Creating an AWS Redshift Cluster, creating tables, and performing CRUD Operations

Copy data from s3 to AWS Redshift Tables

Understanding Distribution Styles and creating tables using Distkeys

Running queries on external RDBMS Tables using AWS Redshift Federated Queries

Running queries on Glue or Athena Catalog tables using AWS Redshift Spectrum

Requirements

Programming experience using Python

Data Engineering experience using Spark

Ability to write and interpret SQL Queries

This course is ideal for experienced data engineers to add AWS Analytics Services as key skills to their profile

Description

Data Engineering is all about building Data Pipelines to get data from multiple sources into a Data Lake or Data Warehouse, and then from the Data Lake or Data Warehouse to downstream systems. As part of this course, I will walk you through how to build Data Engineering Pipelines using the AWS Analytics Stack. It includes services such as Glue, Elastic MapReduce (EMR), Lambda Functions, Athena, Kinesis, and many more.

Here are the high-level steps you will follow as part of the course.

Setup Development Environment

Getting Started with AWS

Storage - All about AWS s3 (Simple Storage Service)

User Level Security - Managing Users, Roles and Policies using IAM

Infrastructure - AWS EC2 (Elastic Compute Cloud)

Data Ingestion using AWS Lambda Functions

Development Life Cycle of PySpark

Overview of AWS Glue Components

Setup Spark History Server for AWS Glue Jobs

Deep Dive into AWS Glue Catalog

Exploring AWS Glue Job APIs

AWS Glue Job Bookmarks

Getting Started with AWS EMR

Deploying Spark Applications using AWS EMR

Streaming Pipeline using AWS Kinesis

Consuming Data Ingested by AWS Kinesis from AWS S3 using boto3

Populating GitHub Data to AWS DynamoDB

Overview of Amazon AWS Athena

Amazon AWS Athena using AWS CLI

Amazon AWS Athena using Python boto3

Getting Started with Amazon AWS Redshift

Copy Data from AWS s3 into AWS Redshift Tables

Develop Applications using AWS Redshift Cluster

AWS Redshift Tables with Distkeys and Sortkeys

AWS Redshift Federated Queries and Spectrum

Here are the details of what you will learn as part of this course. We will cover, with hands-on practice, most of the commonly used services available under AWS Analytics.

Getting Started with AWS

As part of this section you will be going through the details related to getting started with AWS.

Introduction - AWS Getting Started

Create s3 Bucket

Create AWS IAM Group and AWS IAM User to have required access on s3 Bucket and other services

Overview of AWS IAM Roles

Create and Attach Custom AWS IAM Policy to both AWS IAM Groups as well as Users

Configure and Validate AWS CLI to access AWS Services using AWS CLI Commands

Storage - All about AWS s3 (Simple Storage Service)

AWS S3 is one of the most prominent fully managed AWS services. All IT professionals who would like to work on AWS should be familiar with it. We will get into quite a few common features of AWS S3 in this section.

Getting Started with AWS S3

Setup Data Set locally to upload to AWS s3

Adding AWS S3 Buckets and Managing Objects (files and folders) in AWS S3 Buckets

Version Control for AWS S3 Buckets

Cross-Region Replication for AWS S3 Buckets

Overview of AWS S3 Storage Classes

Overview of AWS S3 Glacier

Managing AWS S3 using AWS CLI Commands

Managing Objects in AWS S3 using CLI - Lab

User Level Security - Managing Users, Roles, and Policies using IAM

Once you start working on AWS, you need to understand the permissions you have as a non-admin user. As part of this section, you will learn the details of AWS IAM users, groups, roles, and policies.

Creating AWS IAM Users

Logging into AWS Management Console using AWS IAM User

Validate Programmatic Access to AWS IAM User

AWS IAM Identity-based Policies

Managing AWS IAM Groups

Managing AWS IAM Roles

Overview of Custom AWS IAM Policies

Managing AWS IAM users, groups, roles as well as policies using AWS CLI Commands
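
As a minimal sketch of what a custom identity-based policy looks like, the helper below builds the policy JSON for a single S3 bucket: list access on the bucket itself, plus read, write, and delete on its objects. The bucket name `itv-landing-bucket` is hypothetical, and the action list is an illustrative assumption rather than the exact policy used in the course.

```python
import json


def s3_read_write_policy(bucket_name: str) -> dict:
    """Build an identity-based IAM policy granting list access on one bucket
    and read/write/delete access on the objects inside it."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket is evaluated against the bucket ARN itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": bucket_arn,
            },
            {
                # Object-level actions are evaluated against the keys in the bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket name, used only for illustration
    print(json.dumps(s3_read_write_policy("itv-landing-bucket"), indent=2))
```

The printed document can be pasted into the JSON tab of the IAM policy editor, or saved to a file and passed to `aws iam create-policy --policy-name <name> --policy-document file://policy.json`, and then attached to a group or user.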

Infrastructure - AWS EC2 (Elastic Compute Cloud) Basics

AWS EC2 Instances are nothing but virtual machines on AWS. As part of this section, we will go through some of the basics of AWS EC2.

Getting Started with AWS EC2

Create AWS EC2 Key Pair

Launch AWS EC2 Instance

Connecting to AWS EC2 Instance

AWS EC2 Security Groups Basics

AWS EC2 Public and Private IP Addresses

AWS EC2 Life Cycle

Allocating and Assigning AWS Elastic IP Address

Managing AWS EC2 Using AWS CLI

Upgrade or Downgrade AWS EC2 Instances

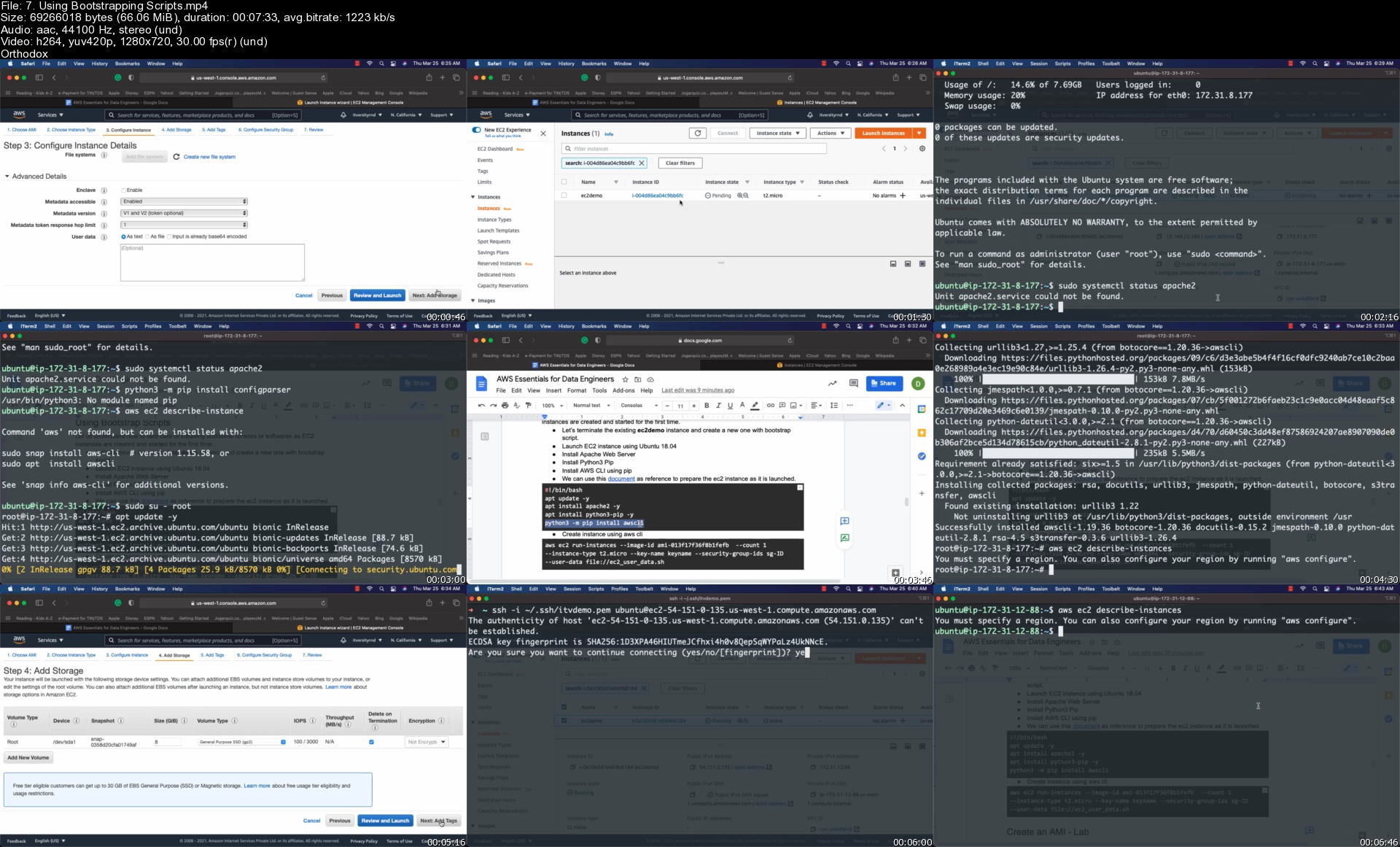

Infrastructure - AWS EC2 Advanced

In this section, we will continue with AWS EC2 to understand how we can manage EC2 instances using AWS CLI commands, and how to install additional OS packages leveraging bootstrap scripts.

Getting Started with AWS EC2

Understanding AWS EC2 Metadata

Querying on AWS EC2 Metadata

Filtering on AWS EC2 Metadata

Using Bootstrapping Scripts with AWS EC2 Instances to install additional software on AWS EC2 instances

Create an AWS AMI using AWS EC2 Instances

Validate AWS AMI - Lab

Data Ingestion using Lambda Functions

AWS Lambda functions are nothing but serverless functions. In this section, we will understand how we can develop and deploy Lambda functions using Python as the programming language. We will also see how to maintain a bookmark or checkpoint using S3.

Hello World using AWS Lambda

Setup Project for local development of AWS Lambda Functions

Deploy Project to AWS Lambda console

Develop download functionality using requests for AWS Lambda Functions

Using 3rd party libraries in AWS Lambda Functions

Validating AWS s3 access for local development of AWS Lambda Functions

Develop upload functionality to s3 using AWS Lambda Functions

Validating AWS Lambda Functions using AWS Lambda Console

Run AWS Lambda Functions using AWS Lambda Console

Validating files incrementally downloaded using AWS Lambda Functions

Reading and Writing Bookmark to s3 using AWS Lambda Functions

Maintaining Bookmark on s3 using AWS Lambda Functions

Review the incremental upload logic developed using AWS Lambda Functions

Deploying AWS Lambda Functions

Schedule AWS Lambda Functions using AWS EventBridge
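
The bookmark idea in this section can be sketched in plain Python. This sketch assumes hourly input files named like `2021-01-15-7.json.gz` (the GH Archive naming style; treat the pattern as an assumption) and keeps the S3 reads and writes and the `requests` download behind callables, so the incremental logic can be followed without AWS. All function names here are hypothetical.

```python
from datetime import datetime, timedelta

# Assumed hourly file-name pattern, e.g. "2021-01-15-7.json.gz" (hour not zero-padded)
FILE_FORMAT = "{:%Y-%m-%d}-{}.json.gz"


def file_name_for(ts: datetime) -> str:
    """Render the file name for the hour that starts at ts."""
    return FILE_FORMAT.format(ts, ts.hour)


def parse_file_name(name: str) -> datetime:
    """Invert file_name_for: '2021-01-15-7.json.gz' -> 2021-01-15 07:00."""
    stem = name[: -len(".json.gz")]
    date_part, hour = stem.rsplit("-", 1)
    return datetime.strptime(date_part, "%Y-%m-%d") + timedelta(hours=int(hour))


def files_to_download(bookmark: str, until: datetime) -> list:
    """File names strictly after the bookmarked one, up to the hour at `until`."""
    next_hour = parse_file_name(bookmark) + timedelta(hours=1)
    names = []
    while next_hour <= until:
        names.append(file_name_for(next_hour))
        next_hour += timedelta(hours=1)
    return names


def run_incremental(read_bookmark, write_bookmark, process_file, until):
    """Process pending files in order, advancing the bookmark after each
    success so a failed run resumes from the last good file."""
    bookmark = read_bookmark()
    for name in files_to_download(bookmark, until):
        process_file(name)    # e.g. download with requests, then upload to S3
        write_bookmark(name)  # persist progress only after the upload succeeded
        bookmark = name
    return bookmark
```

Inside a Lambda handler, `read_bookmark` and `write_bookmark` would typically wrap `s3.get_object` and `s3.put_object` on a small bookmark object, and `process_file` would download one file with `requests` and upload it to S3. Writing the bookmark after each file, rather than once at the end, means a run that stops early resumes from the last uploaded file.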

Development Lifecycle for PySpark

In this section, we will focus on the development of Spark applications using PySpark. We will use this application later while exploring EMR in detail.

Setup Virtual Environment and Install PySpark

Getting Started with PyCharm

Passing Run Time Arguments

Accessing OS Environment Variables

Getting Started with Spark

Create Function for Spark Session

Setup Sample Data

Read data from files

Process data using Spark APIs

Write data to files

Validating Writing Data to Files

Productionizing the Code
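
A minimal sketch of the run-time argument, environment variable, and Spark session pieces listed above. The argument order, the `ENVIRON` variable name, the app name, and the JSON-in, Parquet-out step are hypothetical choices for illustration; `pyspark` is imported inside `get_spark_session` so the configuration helper can be exercised without Spark installed.

```python
import os
import sys


def get_run_config(argv, environ):
    """Collect run-time arguments and environment variables.

    Hypothetical contract: argv[1] is the source directory, argv[2] is the
    target directory, and the ENVIRON variable selects DEV or PROD."""
    if len(argv) < 3:
        raise SystemExit(f"usage: {argv[0]} <src_dir> <tgt_dir>")
    return {
        "src_dir": argv[1],
        "tgt_dir": argv[2],
        "environ": environ.get("ENVIRON", "DEV"),
    }


def get_spark_session(environ, app_name):
    """Local mode for development; otherwise let the cluster manager
    (for example YARN on EMR) supply the master."""
    from pyspark.sql import SparkSession  # lazy import; requires pyspark

    builder = SparkSession.builder.appName(app_name)
    if environ == "DEV":
        builder = builder.master("local[*]")
    return builder.getOrCreate()


def main(argv=None, environ=None):
    """Read JSON from the source directory and write Parquet to the target."""
    config = get_run_config(argv or sys.argv, os.environ if environ is None else environ)
    spark = get_spark_session(config["environ"], "GHActivity Ingest")  # hypothetical name
    df = spark.read.json(config["src_dir"])
    df.write.mode("overwrite").parquet(config["tgt_dir"])
```

In the script itself, `main()` would be called under `if __name__ == "__main__":`; keeping the master out of the code for non-DEV runs lets the same file be passed to `spark-submit` on EMR unchanged.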

Overview of AWS Glue Components

In this section, we will get a broad overview of all the important Glue components, such as Glue Crawlers, Glue Databases, Glue Tables, etc. We will also understand how to validate Glue tables using AWS Athena.

Introduction - Overview of AWS Glue Components

Create AWS Glue Crawler and AWS Glue Catalog Database as well as Table

Analyze Data using AWS Athena

Creating AWS S3 Bucket and Role to create AWS Glue Catalog Tables using Crawler on the s3 location

Create and Run the AWS Glue Job to process data in AWS Glue Catalog Tables

Validate using AWS Glue Catalog Table and by running queries using AWS Athena

Create and Run AWS Glue Trigger

Create AWS Glue Workflow

Run AWS Glue Workflow and Validate

Setup Spark History Server for AWS Glue Jobs

AWS Glue uses Apache Spark under the hood to process the data. It is important to set up a Spark History Server for AWS Glue Jobs so that we can troubleshoot issues.

Introduction - Spark History Server for AWS Glue

Setup Spark History Server on AWS

Clone AWS Glue Samples repository

Build AWS Glue Spark UI Container

Update AWS IAM Policy Permissions

Start AWS Glue Spark UI Container

Deep Dive into AWS Glue Catalog

AWS Glue has several components, but the most important ones are AWS Glue Crawlers, Databases, and Catalog Tables. In this section, we will go through some of the most important and commonly used features of the AWS Glue Catalog.

Prerequisites for AWS Glue Catalog Tables

Steps for Creating AWS Glue Catalog Tables

Download Data Set to use to create AWS Glue Catalog Tables

Upload data to s3 to crawl using AWS Glue Crawler to create required AWS Glue Catalog Tables

Create AWS Glue Catalog Database - itvghlandingdb

Create AWS Glue Catalog Table - ghactivity

Running Queries using AWS Athena - ghactivity

Crawling Multiple Folders using AWS Glue Crawlers

Managing AWS Glue Catalog using AWS CLI

Managing AWS Glue Catalog using Python Boto3
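
For the boto3 step above, a minimal sketch that lists the tables of one catalog database together with their S3 locations, using the `get_tables` paginator of the Glue client. The client is passed in as a parameter (in practice `boto3.client("glue")`) so the function can be tried against a stub; `itvghlandingdb` is the database name from this section, everything else is illustrative.

```python
def list_catalog_tables(glue_client, database_name: str) -> dict:
    """Return {table_name: s3_location} for every table in a Glue Catalog
    database, following pagination with the get_tables paginator."""
    tables = {}
    paginator = glue_client.get_paginator("get_tables")
    for page in paginator.paginate(DatabaseName=database_name):
        for table in page["TableList"]:
            # Tables without a storage descriptor (e.g. some views) have no location
            location = table.get("StorageDescriptor", {}).get("Location")
            tables[table["Name"]] = location
    return tables
```

Usage: `list_catalog_tables(boto3.client("glue"), "itvghlandingdb")` should include `ghactivity` once the crawler has run.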

Exploring AWS Glue Job APIs

Once we deploy AWS Glue jobs, we can manage them using the AWS Glue Job APIs. In this section, we will get an overview of the AWS Glue Job APIs used to run and manage the jobs.

Update AWS IAM Role for AWS Glue Job

Generate baseline AWS Glue Job

Running baseline AWS Glue Job

AWS Glue Script for Partitioning Data

Validating using AWS Athena
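
The run-and-monitor pattern behind the Glue Job APIs can be sketched with the boto3 calls `start_job_run` and `get_job_run`. The job name and the `--src_path` argument in the usage line are hypothetical, and the 30-second polling interval is an arbitrary choice.

```python
import time

# JobRunState values after which a run no longer changes
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def run_glue_job(glue, job_name, arguments=None, poll_seconds=30):
    """Start a Glue job run, wait for it to finish, and return (run_id, state)."""
    run_id = glue.start_job_run(JobName=job_name, Arguments=arguments or {})["JobRunId"]
    while True:
        run = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        if run["JobRunState"] in TERMINAL_STATES:
            return run_id, run["JobRunState"]
        time.sleep(poll_seconds)
```

Usage: `run_glue_job(boto3.client("glue"), "ghactivity-partition-job", {"--src_path": "s3://<bucket>/landing/ghactivity/"})`. Custom arguments carry a leading `--` and are read inside the job script with `getResolvedOptions` from `awsglue.utils`.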

Understanding AWS Glue Job Bookmarks

AWS Glue Job Bookmarks can be leveraged to maintain the bookmarks or checkpoints for incremental loads. In this section, we will go through the details related to AWS Glue Job Bookmarks.

Introduction to AWS Glue Job Bookmarks

Cleaning up the data to run AWS Glue Jobs

Overview of AWS Glue CLI and Commands

Run AWS Glue Job using AWS Glue Bookmark

Validate AWS Glue Bookmark using AWS CLI

Add new data to landing zone to run AWS Glue Jobs using Bookmarks

Rerun AWS Glue Job using Bookmark

Validate AWS Glue Job Bookmark and Files for Incremental run

Recrawl the AWS Glue Catalog Table using AWS CLI Commands

Run AWS Athena Queries for Data Validation
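
A minimal sketch of starting a bookmark-enabled run and reading the stored bookmark with boto3, mirroring the CLI steps above. `--job-bookmark-option` set to `job-bookmark-enable` is the Glue special parameter for bookmarks; the job name is hypothetical. Bookmarks only advance when the job script passes `transformation_ctx` to its sources and calls `job.commit()` at the end.

```python
def start_bookmarked_run(glue_client, job_name):
    """Start a run with job bookmarks enabled so that input already
    processed by earlier runs is skipped. Returns the JobRunId."""
    response = glue_client.start_job_run(
        JobName=job_name,
        Arguments={"--job-bookmark-option": "job-bookmark-enable"},
    )
    return response["JobRunId"]


def current_bookmark(glue_client, job_name):
    """Return the stored bookmark entry (run id, version, serialized state)."""
    return glue_client.get_job_bookmark(JobName=job_name)["JobBookmarkEntry"]
```

When a full reload is needed, for example while repeating the clean-up and rerun steps of this section, `glue.reset_job_bookmark(JobName=...)` clears the stored state.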

Getting Started with AWS EMR

As part of this section, we will understand how to get started with an AWS EMR Cluster. We will primarily focus on the AWS EMR Web Console.

Planning for AWS EMR Cluster

Create AWS EC2 Key Pair for AWS EMR Cluster

Setup AWS EMR Cluster with Apache Spark

Understanding Summary of AWS EMR Cluster

Review AWS EMR Cluster Application User Interfaces

Review AWS EMR Cluster Monitoring

Review AWS EMR Cluster Hardware and Cluster Scaling Policy

Review AWS EMR Cluster Configurations

Review AWS EMR Cluster Events

Review AWS EMR Cluster Steps

Review AWS EMR Cluster Bootstrap Actions

Connecting to AWS EMR Master Node using SSH

Disabling Termination Protection for AWS EMR Cluster and Terminating the AWS EMR Cluster

Clone and Create New AWS EMR Cluster

Listing AWS S3 Buckets and Objects using AWS CLI on AWS EMR Cluster

Listing AWS S3 Buckets and Objects using HDFS CLI on AWS EMR Cluster

Managing Files in AWS S3 using HDFS CLI on AWS EMR Cluster

Review AWS Glue Catalog Databases and Tables

Accessing AWS Glue Catalog Databases and Tables using AWS EMR Cluster

Accessing spark-sql CLI of AWS EMR Cluster

Accessing pyspark CLI of AWS EMR Cluster

Accessing spark-shell CLI of AWS EMR Cluster

Create AWS EMR Cluster for Notebooks

Deploying Spark Applications using AWS EMR

As part of this section, we will understand how we typically deploy Spark Applications using AWS EMR. We will be using the Spark Application we developed earlier.

Deploying Applications using AWS EMR - Introduction

Setup AWS EMR Cluster to deploy applications

Validate SSH Connectivity to Master node of AWS EMR Cluster

Setup Jupyter Notebook Environment on AWS EMR Cluster

Create required AWS s3 Bucket for AWS EMR Cluster

Upload GHActivity Data to s3 so that we can process using Spark Application deployed on AWS EMR Cluster

Validate Application using AWS EMR Compatible Versions of Python and Spark

Deploy Spark Application to AWS EMR Master Node

Create user space for ec2-user on AWS EMR Cluster

Run Spark Application using spark-submit on AWS EMR Master Node

Validate Data using Jupyter Notebooks on AWS EMR Cluster

Clone and Start Auto Terminated AWS EMR Cluster

Delete Data Populated by GHActivity Application using AWS EMR Cluster

Differences between Spark Client and Cluster Deployment Modes on AWS EMR Cluster

Running Spark Application using Cluster Mode on AWS EMR Cluster

Overview of Adding Pyspark Application as Step to AWS EMR Cluster

Deploy Spark Application to AWS S3 to run using AWS EMR Steps

Running Spark Applications as AWS EMR Steps in client mode

Running Spark Applications as AWS EMR Steps in cluster mode

Validate AWS EMR Step Execution of Spark Application
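
Adding the Spark application as an EMR step comes down to a `spark-submit` command run through `command-runner.jar`. The sketch below builds the step definition for either deploy mode; the application path, step name, and arguments are hypothetical, and an `ActionOnFailure` of `CONTINUE` is just one reasonable default.

```python
def spark_step(name, app_path, app_args=(), deploy_mode="cluster",
               action_on_failure="CONTINUE"):
    """Build one EMR step that runs spark-submit via command-runner.jar."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    return {
        "Name": name,
        "ActionOnFailure": action_on_failure,
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            # Everything after the application path is passed to the app itself
            "Args": ["spark-submit", "--deploy-mode", deploy_mode, app_path, *app_args],
        },
    }
```

Usage: `boto3.client("emr").add_job_flow_steps(JobFlowId="j-XXXXXXXXXXXXX", Steps=[spark_step("GHActivity", "s3://<bucket>/app/app.py")])`. In client mode the driver runs on the master node, so its output lands in the step's stdout log; in cluster mode the driver runs inside a YARN container on the cluster.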

Streaming Data Ingestion Pipeline using AWS Kinesis

As part of this section, we will go through the details of a streaming data ingestion pipeline using AWS Kinesis. We will use the AWS Kinesis Firehose Agent and an AWS Kinesis Delivery Stream to read the data from log files and ingest it into AWS S3.

Building Streaming Pipeline using AWS Kinesis Firehose Agent and Delivery Stream

Rotating Logs so that files are created frequently and eventually ingested using AWS Kinesis Firehose Agent and AWS Kinesis Firehose Delivery Stream

Setup AWS Kinesis Firehose Agent to get data from logs into AWS Kinesis Delivery Stream.

Create AWS Kinesis Firehose Delivery Stream

Planning the Pipeline to ingest data into s3 using AWS Kinesis Delivery Stream

Create AWS IAM Group and User for Streaming Pipelines using AWS Kinesis Components

Granting Permissions to AWS IAM User using Policy for Streaming Pipelines using AWS Kinesis Components

Configure AWS Kinesis Firehose Agent to read the data from log files and ingest into AWS Kinesis Firehose Delivery Stream.

Start and Validate AWS Kinesis Firehose Agent

Conclusion - Building Simple Streaming Pipeline using AWS Kinesis Firehose
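
The agent configuration from this section is a small JSON file, typically `/etc/aws-kinesis/agent.json`. A minimal sketch that generates it; the log file pattern, delivery stream name, and region are hypothetical placeholders.

```python
import json


def kinesis_agent_config(file_pattern, delivery_stream, region="us-east-1"):
    """Build an agent config that tails files matching file_pattern and
    sends their records to the named Firehose delivery stream."""
    return {
        "cloudwatch.emitMetrics": True,
        "firehose.endpoint": f"firehose.{region}.amazonaws.com",
        "flows": [
            {"filePattern": file_pattern, "deliveryStream": delivery_stream},
        ],
    }


if __name__ == "__main__":
    # Hypothetical log location and delivery stream name
    config = kinesis_agent_config("/opt/gen_logs/logs/access.log*", "retail-logs-stream")
    print(json.dumps(config, indent=2))
```

The wildcard in `filePattern` lets the agent keep following the log after it is rotated. After writing the file, the agent is started or restarted (for example `sudo service aws-kinesis-agent restart`) so that it picks up the new flow.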

Consuming Data Ingested by AWS Kinesis from AWS S3 using Python boto3

Once data is ingested into AWS S3, we will understand how it can be processed using boto3.

Customizing AWS s3 folder using AWS Kinesis Delivery Stream

Create AWS IAM Policy to read from AWS s3 Bucket

Validate AWS s3 access using AWS CLI

Setup Python Virtual Environment to explore boto3

Validating access to AWS s3 using Python boto3

Read Content from AWS s3 object

Read multiple AWS s3 Objects

Get number of AWS s3 Objects using Marker

Get size of AWS s3 Objects using Marker
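
The marker-based counting and sizing at the end of this section can be sketched as a loop over `list_objects`, which returns at most 1,000 keys per call. The client is passed in as a parameter (in practice `boto3.client("s3")`); bucket and prefix values are up to the caller.

```python
def count_and_size(s3_client, bucket, prefix=""):
    """Return (object_count, total_bytes) under a prefix, paging through
    list_objects with Marker until IsTruncated is False."""
    count, total = 0, 0
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        response = s3_client.list_objects(**kwargs)
        contents = response.get("Contents", [])
        count += len(contents)
        total += sum(obj["Size"] for obj in contents)
        if not response.get("IsTruncated"):
            return count, total
        # Without a Delimiter, S3 omits NextMarker, so resume after the last key
        kwargs["Marker"] = response.get("NextMarker") or contents[-1]["Key"]
```

boto3 also provides `s3.get_paginator("list_objects_v2")`, which hides the continuation tokens; the explicit `Marker` loop matches the approach named in the lesson titles.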

Populating GitHub Data to AWS DynamoDB

As part of this section, we will understand how we can populate AWS DynamoDB tables using Python as the programming language.

Install required libraries to get GitHub Data to AWS DynamoDB tables.

Understanding GitHub APIs

Setting up GitHub API Token

Understanding GitHub Rate Limit

Create New Repository for since

Extracting Required Information using Python

Processing Data using Python

Grant Permissions to create AWS DynamoDB tables using boto3

Create AWS DynamoDB Tables

AWS DynamoDB CRUD Operations

Populate AWS DynamoDB Table

AWS DynamoDB Batch Operations
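
A minimal sketch of the populate step, assuming a table whose partition key is the numeric `id` of a GitHub repository. The fields kept in `to_item` are an illustrative choice from the repository objects the GitHub API returns, and the table name in the usage line is hypothetical.

```python
def to_item(repo: dict) -> dict:
    """Keep only the fields stored in DynamoDB from one GitHub repository
    object (the chosen fields are an illustrative assumption)."""
    return {
        "id": repo["id"],
        "name": repo["name"],
        "full_name": repo["full_name"],
        "owner_login": repo["owner"]["login"],
        "html_url": repo["html_url"],
    }


def populate_table(table, repos) -> int:
    """Write items through the resource-level batch writer and return how
    many were written."""
    written = 0
    with table.batch_writer() as batch:
        for repo in repos:
            batch.put_item(Item=to_item(repo))
            written += 1
    return written
```

With boto3 this would be called as `populate_table(boto3.resource("dynamodb").Table("ghrepos"), repos)`. The resource-level `batch_writer` groups puts into `BatchWriteItem` requests of up to 25 items and resends unprocessed items, which is why no explicit chunking appears here.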

Overview of Amazon AWS Athena

As part of this section, we will understand how to get started with AWS Athena using the AWS Web Console. We will also focus on basic DDL and DML (CRUD) operations using the AWS Athena Query Editor.

Getting Started with Amazon AWS Athena

Quick Recap of AWS Glue Catalog Databases and Tables

Access AWS Glue Catalog Databases and Tables using AWS Athena Query Editor

Create Database and Table using AWS Athena

Populate Data into Table using AWS Athena

Using CTAS to create tables using AWS Athena

Overview of Amazon AWS Athena Architecture

Amazon AWS Athena Resources and relationship with Hive

Create Partitioned Table using AWS Athena

Develop Query for Partitioned Column

Insert into Partitioned Tables using AWS Athena

Validate Data Partitioning using AWS Athena

Drop AWS Athena Tables and Delete Data Files

Drop Partitioned Table using AWS Athena

Data Partitioning in AWS Athena using CTAS
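
For the CTAS-based partitioning steps, the statement shape matters more than the code around it: Athena expects the partition columns to be the last columns of the `SELECT`. A minimal sketch that builds such a statement; the table names, columns, and S3 location in the usage line are hypothetical.

```python
def ctas_partitioned(target_table, external_location, select_columns,
                     partition_columns, source_table, fmt="PARQUET"):
    """Build an Athena CTAS statement that writes a partitioned table,
    moving the partition columns to the end of the SELECT list."""
    regular = [c for c in select_columns if c not in partition_columns]
    columns = ", ".join(regular + list(partition_columns))
    partitions = ", ".join(f"'{c}'" for c in partition_columns)
    return (
        f"CREATE TABLE {target_table}\n"
        f"WITH (\n"
        f"  format = '{fmt}',\n"
        f"  external_location = '{external_location}',\n"
        f"  partitioned_by = ARRAY[{partitions}]\n"
        f")\n"
        f"AS SELECT {columns}\n"
        f"FROM {source_table}"
    )
```

Usage: `print(ctas_partitioned("retail_db.orders_part", "s3://<bucket>/retail_db/orders_part/", ["order_month", "order_id", "order_status"], ["order_month"], "retail_db.orders"))`. The `external_location` should be an empty S3 prefix, since CTAS will not write over existing data.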

Amazon AWS Athena using AWS CLI

As part of this section we will understand how to interact with AWS Athena using AWS CLI Commands.

Amazon AWS Athena using AWS CLI - Introduction

Get help and list AWS Athena databases using AWS CLI

Managing AWS Athena Workgroups using AWS CLI

Run AWS Athena Queries using AWS CLI

Get AWS Athena Table Metadata using AWS CLI

Run AWS Athena Queries with custom location using AWS CLI

Drop AWS Athena table using AWS CLI

Run CTAS under AWS Athena using AWS CLI

Amazon AWS Athena using Python boto3

As part of this section we will understand how to interact with AWS Athena using Python boto3.

Amazon AWS Athena using Python boto3 - Introduction

Getting Started with Managing AWS Athena using Python boto3

List Amazon AWS Athena Databases using Python boto3

List Amazon AWS Athena Tables using Python boto3

Run Amazon AWS Athena Queries with boto3

Review AWS Athena Query Results using boto3

Persist Amazon AWS Athena Query Results in Custom Location using boto3

Processing AWS Athena Query Results using Pandas

Run CTAS against Amazon AWS Athena using Python boto3
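
The boto3 steps in this section follow a submit, poll, and fetch pattern. A minimal sketch using `start_query_execution`, `get_query_execution`, and `get_query_results`; the client is passed in (in practice `boto3.client("athena")`), and the query and output location are up to the caller.

```python
import time

FINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}


def run_athena_query(athena, query, database, output_location, poll_seconds=1.0):
    """Run one Athena query and return its rows as lists of strings."""
    execution_id = athena.start_query_execution(
        QueryString=query,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]

    # Poll until Athena reports a final state
    while True:
        execution = athena.get_query_execution(QueryExecutionId=execution_id)
        state = execution["QueryExecution"]["Status"]["State"]
        if state in FINAL_STATES:
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"query {execution_id} finished in state {state}")

    # Page through the results; NULL cells have no VarCharValue key
    rows, kwargs = [], {"QueryExecutionId": execution_id}
    while True:
        page = athena.get_query_results(**kwargs)
        for row in page["ResultSet"]["Rows"]:
            rows.append([cell.get("VarCharValue") for cell in row["Data"]])
        if "NextToken" not in page:
            return rows
        kwargs["NextToken"] = page["NextToken"]
```

For a `SELECT`, `rows[0]` holds the column names, so the result converts to Pandas with `pd.DataFrame(rows[1:], columns=rows[0])`; values arrive as strings (or `None` for NULLs) and may need casting.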

Getting Started with Amazon AWS Redshift

As part of this section, we will understand how to get started with AWS Redshift using the AWS Web Console. We will also focus on basic DDL and DML (CRUD) operations using the AWS Redshift Query Editor.

Getting Started with Amazon AWS Redshift - Introduction

Create AWS Redshift Cluster using Free Trial

Connecting to Database using AWS Redshift Query Editor

Get list of tables querying information schema

Run Queries against AWS Redshift Tables using Query Editor

Create AWS Redshift Table using Primary Key

Insert Data into AWS Redshift Tables

Update Data in AWS Redshift Tables

Delete data from AWS Redshift tables

Redshift Saved Queries using Query Editor

Deleting AWS Redshift Cluster

Restore AWS Redshift Cluster from Snapshot

Copy Data from s3 into AWS Redshift Tables

As part of this section, we will go through the details of copying data from S3 into AWS Redshift tables using the AWS Redshift COPY command.

Copy Data from s3 to AWS Redshift - Introduction

Setup Data in s3 for AWS Redshift Copy

Copy Database and Table for AWS Redshift Copy Command

Create IAM User with full access on s3 for AWS Redshift Copy

Run Copy Command to copy data from s3 to AWS Redshift Table

Troubleshoot Errors related to AWS Redshift Copy Command

Run Copy Command to copy from s3 to AWS Redshift table

Validate using queries against AWS Redshift Table

Overview of AWS Redshift Copy Command

Create IAM Role for AWS Redshift to access s3

Copy Data from s3 to AWS Redshift table using IAM Role

Setup JSON Dataset in s3 for AWS Redshift Copy Command

Copy JSON Data from s3 to AWS Redshift table using IAM Role
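
The COPY statements used in this section differ mainly in their credentials and format clauses. A minimal sketch that builds them for the IAM role variant; the table, bucket path, and role ARN in the usage line are hypothetical.

```python
def copy_command(table, s3_path, iam_role_arn, file_format="CSV",
                 ignore_header=0, region=None):
    """Build a Redshift COPY statement that loads from S3 using an IAM role."""
    parts = [f"COPY {table}", f"FROM '{s3_path}'", f"IAM_ROLE '{iam_role_arn}'"]
    if file_format.upper() == "JSON":
        # 'auto' matches top-level JSON keys to column names
        parts.append("FORMAT AS JSON 'auto'")
    else:
        parts.append("FORMAT AS CSV")
        if ignore_header:
            parts.append(f"IGNOREHEADER {int(ignore_header)}")
    if region:
        # Needed when the bucket is in a different region from the cluster
        parts.append(f"REGION '{region}'")
    return "\n".join(parts) + ";"
```

Usage: `print(copy_command("retail_db.orders", "s3://<bucket>/retail_db/orders/", "arn:aws:iam::<account-id>:role/<role-name>"))`; the generated statement can be pasted into the Query Editor or executed from Python through a database cursor.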

Develop Applications using AWS Redshift Cluster

As part of this section, we will understand how to develop applications against databases and tables created in an AWS Redshift Cluster.

Develop application using AWS Redshift Cluster - Introduction

Allocate Elastic IP for AWS Redshift Cluster

Enable Public Accessibility for AWS Redshift Cluster

Update Inbound Rules in Security Group to access AWS Redshift Cluster

Create Database and User in AWS Redshift Cluster

Connect to database in AWS Redshift using psql

Change Owner on AWS Redshift Tables

Download AWS Redshift JDBC Jar file

Connect to AWS Redshift Databases using IDEs such as SQL Workbench

Setup Python Virtual Environment for AWS Redshift

Run Simple Query against AWS Redshift Database Table using Python

Truncate AWS Redshift Table using Python

Create IAM User to copy from s3 to AWS Redshift Tables

Validate Access of IAM User using Boto3

Run AWS Redshift Copy Command using Python

AWS Redshift Tables with Distkeys and Sortkeys

As part of this section, we will go through AWS Redshift-specific features, such as distribution keys and sort keys, used to create AWS Redshift tables.

AWS Redshift Tables with Distkeys and Sortkeys - Introduction

Quick Review of AWS Redshift Architecture

Create multi-node AWS Redshift Cluster

Connect to AWS Redshift Cluster using Query Editor

Create AWS Redshift Database

Create AWS Redshift Database User

Create AWS Redshift Database Schema

Default Distribution Style of AWS Redshift Table

Grant Select Permissions on Catalog to AWS Redshift Database User

Update Search Path to query AWS Redshift system tables

Validate AWS Redshift table with DISTSTYLE AUTO

Create AWS Redshift Cluster from Snapshot to the original state

Overview of Node Slices in AWS Redshift Cluster

Overview of Distribution Styles related to AWS Redshift tables

Distribution Strategies for retail tables in AWS Redshift Databases

Create AWS Redshift tables with distribution style all

Troubleshoot and Fix Load or Copy Errors

Create AWS Redshift Table with Distribution Style Auto

Create AWS Redshift Tables using Distribution Style Key

Delete AWS Redshift Cluster with manual snapshot
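
To build intuition for the distribution styles in this section, here is a toy model of where rows land on node slices. It is an illustration only: `zlib.crc32` stands in for Redshift's internal hash, which slice holds an ALL copy is a simplification, and AUTO (where Redshift picks a style for you) is not modeled.

```python
from collections import defaultdict
from zlib import crc32


def distribute(rows, num_nodes, slices_per_node, style, dist_key=None):
    """Toy model: return {slice_number: rows} for one distribution style."""
    total_slices = num_nodes * slices_per_node
    placement = defaultdict(list)
    for i, row in enumerate(rows):
        if style == "EVEN":
            # Round-robin across every slice in the cluster
            placement[i % total_slices].append(row)
        elif style == "KEY":
            # Same key value -> same hash -> same slice
            slot = crc32(str(row[dist_key]).encode()) % total_slices
            placement[slot].append(row)
        elif style == "ALL":
            # One full copy per node (kept on the node's first slice in this model)
            for node in range(num_nodes):
                placement[node * slices_per_node].append(row)
        else:
            raise ValueError(f"unsupported distribution style: {style}")
    return dict(placement)
```

Distributing two tables with `KEY` on their join column (for example `order_id` in orders and order_items) puts matching rows on the same slice, which is what lets Redshift join co-located tables without redistributing data; `ALL` trades storage for the same effect on small dimension tables.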

AWS Redshift Federated Queries and Spectrum

As part of this section we will go through some of the advanced features of Redshift such as AWS Redshift Federated Queries and AWS Redshift Spectrum.

AWS Redshift Federated Queries and Spectrum - Introduction

Overview of integrating AWS RDS and AWS Redshift for Federated Queries

Create IAM Role for AWS Redshift Cluster

Setup Postgres Database Server for AWS Redshift Federated Queries

Create tables in Postgres Database for AWS Redshift Federated Queries

Creating Secret using Secrets Manager for Postgres Database

Accessing Secret Details using Python Boto3

Reading JSON Data into a DataFrame using Pandas

Write JSON Data to AWS Redshift Database Tables using Pandas

Create AWS IAM Policy for Secret and associate with Redshift Role

Create AWS Redshift Cluster using AWS IAM Role with permissions on secret

Create AWS Redshift External Schema to Postgres Database

Update AWS Redshift Cluster Network Settings for Federated Queries

Performing ETL using AWS Redshift Federated Queries

Clean up resources added for AWS Redshift Federated Queries

Grant Access on AWS Glue Data Catalog to AWS Redshift Cluster for Spectrum

Setup AWS Redshift Clusters to run queries using Spectrum

Quick Recap of AWS Glue Catalog Database and Tables for AWS Redshift Spectrum

Create External Schema using AWS Redshift Spectrum

Run Queries using AWS Redshift Spectrum

Cleanup the AWS Redshift Cluster
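
The federated query setup combines the Secrets Manager and external schema steps. A minimal sketch that reads the Postgres connection details from a secret with boto3 (`get_secret_value`) and builds the `CREATE EXTERNAL SCHEMA ... FROM POSTGRES` statement; it assumes the secret's JSON holds `host`, `port`, and `dbname` keys, and the schema and role names are hypothetical.

```python
import json


def federated_schema_ddl(secrets_client, secret_id, schema_name,
                         iam_role_arn, pg_schema="public"):
    """Read Postgres connection details from a Secrets Manager secret and
    build the external schema DDL for Redshift federated queries."""
    response = secrets_client.get_secret_value(SecretId=secret_id)
    # Assumed secret layout: {"host": ..., "port": ..., "dbname": ..., ...}
    secret = json.loads(response["SecretString"])
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {schema_name}\n"
        f"FROM POSTGRES\n"
        f"DATABASE '{secret['dbname']}' SCHEMA '{pg_schema}'\n"
        f"URI '{secret['host']}' PORT {int(secret['port'])}\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"SECRET_ARN '{response['ARN']}';"
    )
```

For Spectrum, the corresponding statement points at the Glue Data Catalog instead: `CREATE EXTERNAL SCHEMA <name> FROM DATA CATALOG DATABASE '<glue_database>' IAM_ROLE '<role_arn>';`.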

Who this course is for

Beginner or Intermediate Data Engineers who want to learn AWS Analytics Services for Data Engineering

Intermediate Application Engineers who want to explore Data Engineering using AWS Analytics Services

Data and Analytics Engineers who want to learn Data Engineering using AWS Analytics Services

Testers who want to learn how to test Data Engineering applications built using AWS Analytics Services

Homepage

https://www.udemy.com/course/data-engineering-using-aws-analytics-services
