Mastering Ollama: Build Private Local LLM Apps with Python

Published 10/2024

MP4 | Video: h264, 1920x1080 | Audio: AAC, 44.1 kHz

Language: English | Size: 1.99 GB | Duration: 3h 19m

Run custom Ollama LLMs privately on your system, use a ChatGPT-like UI, and build hands-on projects with no cloud or extra costs required.

[b]What you'll learn[/b]

Install and configure Ollama on your local system to run large language models privately.

Customize LLM models to suit specific needs using Ollama's options and command-line tools.

Use the terminal commands needed to control, monitor, and troubleshoot Ollama models.

Set up and manage a ChatGPT-like interface, allowing you to interact with models locally.

Use different model types (text, vision, and code-generating models) for various applications.

Create custom LLM models from a Modelfile and integrate them into your applications.

Build Python applications that interface with Ollama models using its native library and OpenAI API compatibility.

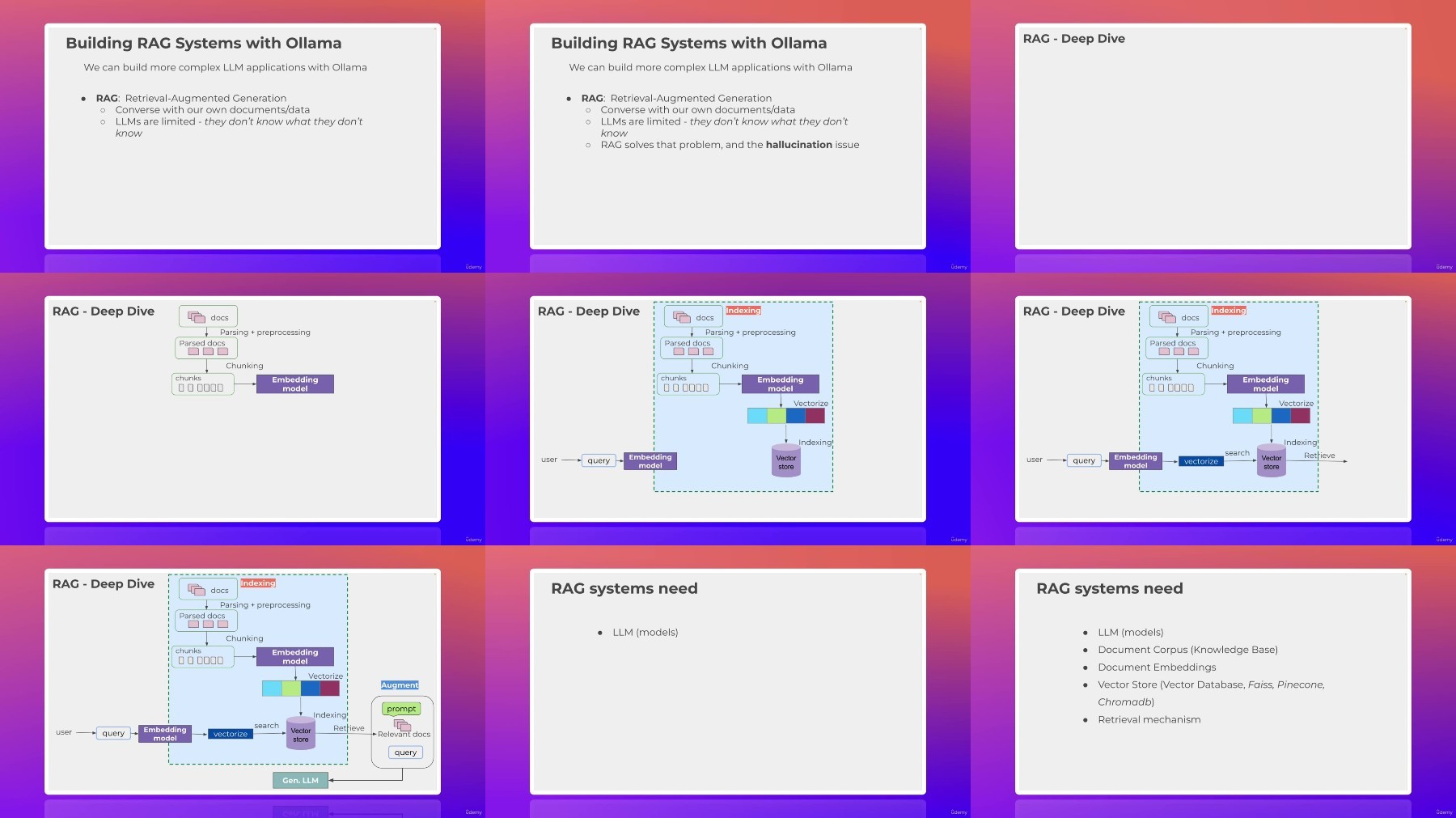

Develop Retrieval-Augmented Generation (RAG) applications by integrating Ollama models with LangChain.

Implement tools and function calling to enhance model interactions for advanced workflows.

Set up a user-friendly UI frontend that lets users chat with different Ollama models.
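The Python outcomes above can be illustrated without any third-party package by calling Ollama's REST API directly. This is a minimal sketch, assuming a local Ollama server on its default port 11434 and an already pulled `llama3.2` model. The helper names `build_chat_payload` and `chat` are hypothetical; the course itself may use the `ollama` Python package or the OpenAI-compatible endpoint instead.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model, prompt, json_mode=False):
    """Build the request body for Ollama's /api/chat endpoint (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response instead of a stream
    }
    if json_mode:
        payload["format"] = "json"  # request JSON mode: the reply must be valid JSON
    return payload


def chat(model, prompt, json_mode=False, url=OLLAMA_CHAT_URL):
    """Send one chat turn to a local Ollama server and return the reply text."""
    body = json.dumps(build_chat_payload(model, prompt, json_mode)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    # Supplying data makes this a POST request.
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # A non-streaming chat response carries the reply under message.content.
    return data["message"]["content"]
```

With the server running, `chat("llama3.2", "Explain what a Modelfile is in one sentence.")` returns the reply text. Passing `json_mode=True` sets the request's `format` field to `"json"`, which is how the REST API's JSON mode is requested.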

[b]Requirements[/b]

Basic Python Programming Knowledge

Comfort with Command Line Interface (CLI)

[b]Description[/b]

Are you concerned about data privacy and the high costs of using Large Language Models (LLMs)? If so, this course is the perfect fit for you. "Mastering Ollama: Build Private LLM Applications with Python" empowers you to run powerful AI models directly on your own system, keeping your data private and eliminating the need for expensive cloud services. By learning to deploy and customize local LLMs with Ollama, you'll maintain full control over your data and applications while avoiding the ongoing expenses and potential risks of cloud-based solutions.

This hands-on course takes you from beginner to expert in using Ollama, a platform designed for running LLMs locally. You'll learn how to set up and customize models, create a ChatGPT-like interface, and build private applications using Python, all from the comfort of your own system.

In this course, you will:

Install and configure Ollama for local LLM model execution.

Customize LLM models to suit your specific needs using Ollama's tools.

Master command-line tools to control, monitor, and troubleshoot Ollama models.

Integrate various models, including text, vision, and code-generating models, and even create your own custom models.

Build Python applications that interface with Ollama models using its native library and OpenAI API compatibility.

Develop Retrieval-Augmented Generation (RAG) applications by integrating Ollama models with LangChain.

Implement tools and function calling to enhance model interactions in terminal and LangChain environments.

Set up a user-friendly UI frontend that lets users chat with different Ollama models.

Why is this course important?

As data privacy concerns grow, running LLMs locally keeps your data on your own machine. This enhances data security and allows you to customize models for specialized tasks without external dependencies or additional costs.

You'll engage in practical activities like building custom models, developing RAG applications that retrieve and respond to user queries based on your data, and creating interactive interfaces. Each section includes real-world applications to give you the experience and confidence to build your own local LLM solutions.

Why choose this course?

This course is uniquely crafted to make advanced AI concepts approachable and actionable. We focus on practical, hands-on learning, enabling you to build real-world solutions from day one. You'll dive deep into projects that bridge theory and practice, so you gain tangible skills in developing local LLM applications. Whether you're new to large language models or seeking to enhance your existing abilities, this course provides the guidance and tools you need to confidently create private AI applications using Ollama and Python.

Ready to develop powerful AI applications while keeping your data completely private? Enroll today and take full control of your AI journey with Ollama. Harness the capabilities of local LLMs on your own system and take your skills to the next level!
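The retrieval step in a RAG application, which finds the pieces of your data most relevant to a query before the model answers, can be sketched in plain Python. This is a minimal illustration, not the course's LangChain pipeline: it swaps in a hand-rolled cosine-similarity search over a hypothetical list of toy vectors in place of a real vector store and embedding model. The names `retrieve`, `build_rag_prompt`, and `chunks` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as unrelated to everything
    return dot / (norm_a * norm_b)


def retrieve(query_vector, chunks, k=2):
    """Return the texts of the k chunks most similar to the query vector."""
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vector, chunk["vector"]),
        reverse=True,
    )
    return [chunk["text"] for chunk in ranked[:k]]


def build_rag_prompt(question, context_chunks):
    """Place the retrieved chunks into a prompt for the local model."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical toy data: in a real pipeline the vectors would come from an
# embedding model and the texts from chunks of an ingested document.
chunks = [
    {"text": "Ollama serves models on port 11434 by default.", "vector": [0.9, 0.1, 0.0]},
    {"text": "A Modelfile needs a FROM instruction.", "vector": [0.1, 0.9, 0.0]},
    {"text": "Streamlit builds simple web UIs in Python.", "vector": [0.0, 0.1, 0.9]},
]
```

The prompt returned by `build_rag_prompt` would then be sent to a local model, so the answer is grounded in your own documents rather than only in the model's training data.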

Overview

Section 1: Introduction

Lecture 1 Introduction & What Will You Learn

Lecture 2 Course Prerequisites

Lecture 3 Please WATCH this DEMO

Section 2: Development Environment Setup

Lecture 4 Development Environment Setup

Lecture 5 Udemy 101 - Tips for A Better Learning Experience

Section 3: Download Code and Resources

Lecture 6 How to Get Source Code

Lecture 7 Download Source Code and Resources

Section 4: Ollama Deep Dive - Introduction to Ollama and Setup

Lecture 8 Ollama Deep Dive - Ollama Overview - What is Ollama and Advantages

Lecture 9 Ollama Key Features and Use Cases

Lecture 10 System Requirements & Ollama Setup - Overview

Lecture 11 Download and Set Up Ollama and the Llama 3.2 Model - Hands-on & Testing

Lecture 12 Ollama Models Page - Full Overview

Lecture 13 Ollama Model Parameters Deep Dive

Lecture 14 Understanding Parameters, Disk Size, and Computational Resources Needed

Section 5: Ollama CLI Commands and the REST API - Hands-on

Lecture 15 Ollama Commands - Pulling and Testing a Model

Lecture 16 Pull in the LLaVA Multimodal Model and Caption an Image

Lecture 17 Summarization and Sentiment Analysis & Customizing Our Model with the Modelfile

Lecture 18 Ollama REST API - Generate and Chat Endpoints

Lecture 19 Ollama REST API - Request JSON Mode

Lecture 20 Ollama Models Support Different Tasks - Summary

Section 6: Ollama - User Interfaces for Ollama Models

Lecture 21 Different Ways to Interact with Ollama Models - Overview

Lecture 22 Ollama Models Running in the Msty App - Frontend Tool - RAG System Chat with Docs

Section 7: Ollama Python Library - Using Python to Interact with Ollama Models

Lecture 23 The Ollama Python Library for Building LLM Local Applications - Overview

Lecture 24 Interact with Llama3 in Python Using Ollama REST API - Hands-on

Lecture 25 Ollama Python Library - Chatting with a Model

Lecture 26 Chat Example with Streaming

Lecture 27 Using the Ollama show Function

Lecture 28 Create a Custom Model in Code

Section 8: Building LLM Applications with Ollama Models

Lecture 29 Hands-on: Build an LLM App - Grocery List Categorizer

Lecture 30 Building RAG Systems with Ollama - RAG & LangChain Overview

Lecture 31 Deep Dive into Vectorstore and Embeddings - The Whole Picture - Crash course

Lecture 32 PDF RAG System Overview - What We'll Build

Lecture 33 Setup RAG System - Document Ingestion & Vector Database Creation and Embeddings

Lecture 34 RAG System - Retrieval and Querying

Lecture 35 RAG System - Cleaner Code

Lecture 36 RAG System - Streamlit UI

Section 9: Ollama Tool Function Calling - Hands-on

Lecture 37 Function Calling (Tools) Overview

Lecture 38 Set Up the Tool Function Calling Application

Lecture 39 Categorize Items Using the Model and Setup the Tools List

Lecture 40 Tools Calling LLM Application - Final Product

Section 10: Final RAG System with Ollama and Voice Response

Lecture 41 Voice RAG System - Overview

Lecture 42 Set Up the ElevenLabs API Key and Load and Summarize the Document

Lecture 43 Ollama Voice RAG System - Working!

Lecture 44 Adding ElevenLabs Voice Generation to Read the Response Back to Us

Section 11: Wrap up

Lecture 45 Wrap up - What's Next?
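The tool-calling flow covered in Section 9 can be sketched as follows. In Ollama's chat API, a list of function schemas is passed in the request's `tools` field, and when the model decides to use one, the assistant message carries a `tool_calls` list. This is a minimal sketch under stated assumptions: the `add_to_grocery_list` tool and the `TOOLS`, `AVAILABLE_FUNCTIONS`, and `run_tool_calls` names are hypothetical, and the exact shape of the tool-result message may vary between Ollama versions. No server is contacted here; the code only dispatches calls that have already been parsed from a response.

```python
import json


def add_to_grocery_list(item, category):
    """Hypothetical tool the model may ask us to call."""
    return f"Added {item} to {category}"


# Function schema sent in the chat request's "tools" field.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "add_to_grocery_list",
            "description": "Add an item to the grocery list under a category.",
            "parameters": {
                "type": "object",
                "properties": {
                    "item": {"type": "string", "description": "Item name"},
                    "category": {"type": "string", "description": "e.g. Produce or Dairy"},
                },
                "required": ["item", "category"],
            },
        },
    }
]

# Maps tool names the model may return to local Python callables.
AVAILABLE_FUNCTIONS = {"add_to_grocery_list": add_to_grocery_list}


def run_tool_calls(message):
    """Execute each tool call in an assistant message; return tool-role messages."""
    results = []
    for call in message.get("tool_calls", []):
        name = call["function"]["name"]
        args = call["function"]["arguments"]
        if isinstance(args, str):  # tolerate OpenAI-style JSON-string arguments
            args = json.loads(args)
        func = AVAILABLE_FUNCTIONS.get(name)
        output = func(**args) if func else f"Unknown tool: {name}"
        results.append({"role": "tool", "content": output})
    return results
```

The returned tool messages can be appended to the conversation and sent back to the model, which can then produce its final answer using the tool results.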

[b]Who this course is for[/b]

Python Developers looking to expand their skill set by integrating Large Language Models (LLMs) into their applications.

AI Enthusiasts and Practitioners interested in running and customizing local LLMs privately without relying on cloud services.

Data Scientists and Machine Learning Engineers who want to understand and implement local AI models using Ollama and LangChain.

Software Engineers aiming to develop secure AI applications on their own systems, maintaining full control over data and infrastructure.

Students and Researchers exploring the capabilities of local LLMs and seeking hands-on experience with advanced AI technologies.

Professionals Concerned with Data Privacy who need to process sensitive information without sending data to external servers or cloud platforms.

Anyone interested in building ChatGPT-like applications locally who wants to gain practical experience through real-world projects.

Beginners to LLMs and Ollama who have basic Python knowledge and are eager to learn about AI application development.

Educators and Trainers seeking to incorporate AI and LLMs into their curriculum or training programs without relying on external services.
