A deep understanding of AI large language model mechanisms

Published 8/2025

Created by Mike X Cohen

Level: All | Genre: eLearning | Language: English | Duration: 328 lectures (93h 8m)

Build and train LLM NLP transformers and attention mechanisms (PyTorch). Explore them with mechanistic interpretability tools.

What you'll learn

Large language model (LLM) architectures, including GPT (OpenAI) and BERT

Transformer blocks

Attention algorithm

PyTorch

LLM pretraining

Explainable AI

Mechanistic interpretability

Machine learning

Deep learning

Principal components analysis

High-dimensional clustering

Dimension reduction

Advanced cosine similarity applications

Requirements

Motivation to learn about large language models and AI

Experience with coding is helpful but not necessary

Familiarity with machine learning is helpful but not necessary

Basic linear algebra is helpful

Deep learning, including gradient descent, is helpful but not necessary

Description

Deep Understanding of Large Language Models (LLMs): Architecture, Training, and Mechanisms

Large Language Models (LLMs) like ChatGPT, GPT-4, GPT-5, Claude, Gemini, and LLaMA are transforming artificial intelligence, natural language processing (NLP), and machine learning. But most courses only teach you how to use LLMs. This 90+ hour intensive course teaches you how they actually work - and how to dissect them using machine-learning and mechanistic interpretability methods.

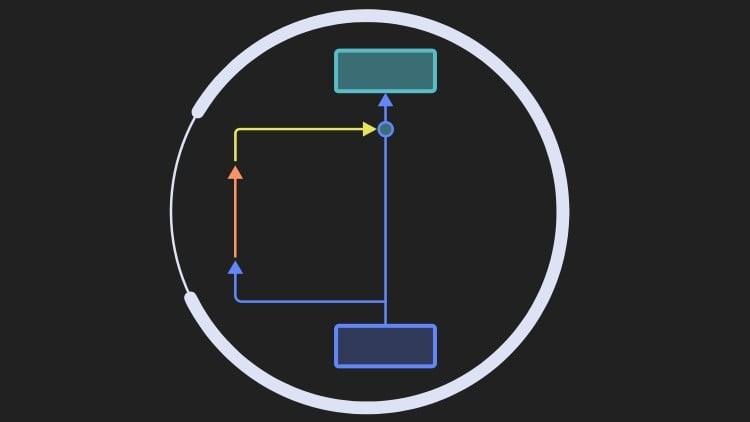

This is a deep, end-to-end exploration of transformer architectures, self-attention mechanisms, embedding layers, training pipelines, and inference strategies - with hands-on Python and PyTorch code at every step.

Whether your goal is to build your own transformer from scratch, fine-tune existing models, or understand the mathematics and engineering behind state-of-the-art generative AI, this course will give you the foundation and tools you need.

What You'll Learn

The complete architecture of LLMs - tokenization, embeddings, encoders, decoders, attention heads, feedforward networks, and layer normalization

Mathematics of attention mechanisms - dot-product attention, multi-head attention, positional encoding, causal masking, probabilistic token selection

Training LLMs - optimization (Adam, AdamW), loss functions, gradient accumulation, batch processing, learning-rate schedulers, regularization (L1, L2, decorrelation), gradient clipping

Fine-tuning, system-tuning, and prompt engineering for downstream NLP tasks

Evaluation metrics and benchmarks - perplexity, accuracy, MAUVE, benchmark datasets such as HellaSwag and SuperGLUE, and ways to assess bias and fairness

Practical PyTorch implementations of transformers, attention layers, language-model training loops, custom classes, and custom loss functions

Inference techniques - greedy decoding, beam search, top-k sampling, temperature scaling

Scaling laws and trade-offs between model size, training data, and performance

Limitations and biases in LLMs - interpretability, ethical considerations, and responsible AI

Decoder-only transformers

Embeddings, including token embeddings and positional embeddings

Sampling techniques - methods for generating new text, including top-p, top-k, multinomial, and greedy
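The decoding and sampling strategies listed above (greedy, temperature scaling, top-k, top-p, and multinomial sampling) can be sketched in a few lines. This is a minimal pure-Python illustration under stated assumptions, not code from the course. The function names and the toy logits are hypothetical. A PyTorch model would apply the same steps to a logits tensor, for example with `torch.softmax` and `torch.multinomial`.

```python
import math
import random

# Hypothetical helpers for illustration only; not the course's implementation.

def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(logits):
    """Greedy decoding: always pick the index of the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])

def top_k_filter(probs, k):
    """Keep only the k most probable tokens, zero the rest, then renormalize."""
    keep = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]

def top_p_filter(probs, p):
    """Nucleus (top-p): keep the smallest set of top tokens whose mass reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]

def sample(probs, rng=random):
    """Multinomial sampling: draw one token index with probability probs[i]."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Usage with a hypothetical 4-token vocabulary.
logits = [2.0, 1.0, 0.5, -1.0]
rng = random.Random(0)
print(greedy(logits))  # 0
probs = softmax(logits, temperature=0.7)
print(sample(top_k_filter(probs, k=2), rng))  # either 0 or 1
```

Lower temperatures concentrate probability on the top tokens. Top-k and top-p truncate the low-probability tail before the multinomial draw. Greedy decoding is the limiting case that always takes the argmax.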

Why This Course Is Different

93+ hours of HD video lectures - blending theory, code, and practical application

Code challenges in every section - with full, downloadable solutions

Builds from first principles - starting from basic Python/NumPy implementations and progressing to full PyTorch LLMs

Suitable for researchers, engineers, and advanced learners who want to go beyond "black box" API usage

Clear explanations without dumbing down the content - intensive but approachable

Who Is This Course For?

Machine learning engineers and data scientists

AI researchers and NLP specialists

Software developers interested in deep learning and generative AI

Graduate students or self-learners with intermediate Python skills and basic ML knowledge

Technologies & Tools Covered

Python and PyTorch for deep learning

NumPy and Matplotlib for numerical computing and visualization

Google Colab for free GPU access

Hugging Face Transformers for working with pre-trained models

Tokenizers and text preprocessing tools

Implement Transformers in PyTorch, fine-tune LLMs, decode with attention mechanisms, and probe model internals

What if you have questions about the material?

This course has a Q&A (question and answer) section where you can post your questions about the course material (about the maths, statistics, coding, or machine learning aspects). I try to answer all questions within a day. You can also see all other questions and answers, which really improves how much you can learn! And you can contribute to the Q&A by posting to ongoing discussions.

By the end of this course, you won't just know how to work with LLMs - you'll understand why they work the way they do, and be able to design, train, evaluate, and deploy your own transformer-based language models.

Enroll now and start mastering Large Language Models from the ground up.

Who this course is for

AI engineers

Scientists interested in modern autoregressive modeling

Natural language processing enthusiasts

Students in a machine-learning or data science course

Graduate students or self-learners

Undergraduates interested in large language models

Machine-learning or data science practitioners

Researchers in explainable AI

Homepage

https://anonymz.com/?https://www.udemy.com/course/dullms_x/